In a previous episode, I ran quite a few tests and experiments to understand how to code for an MO5.

I had asked the AI to summarize what we had learned into Markdown files. The underlying idea was to be able to share this experience with my new MO5 projects without having to copy .md files into each repository.

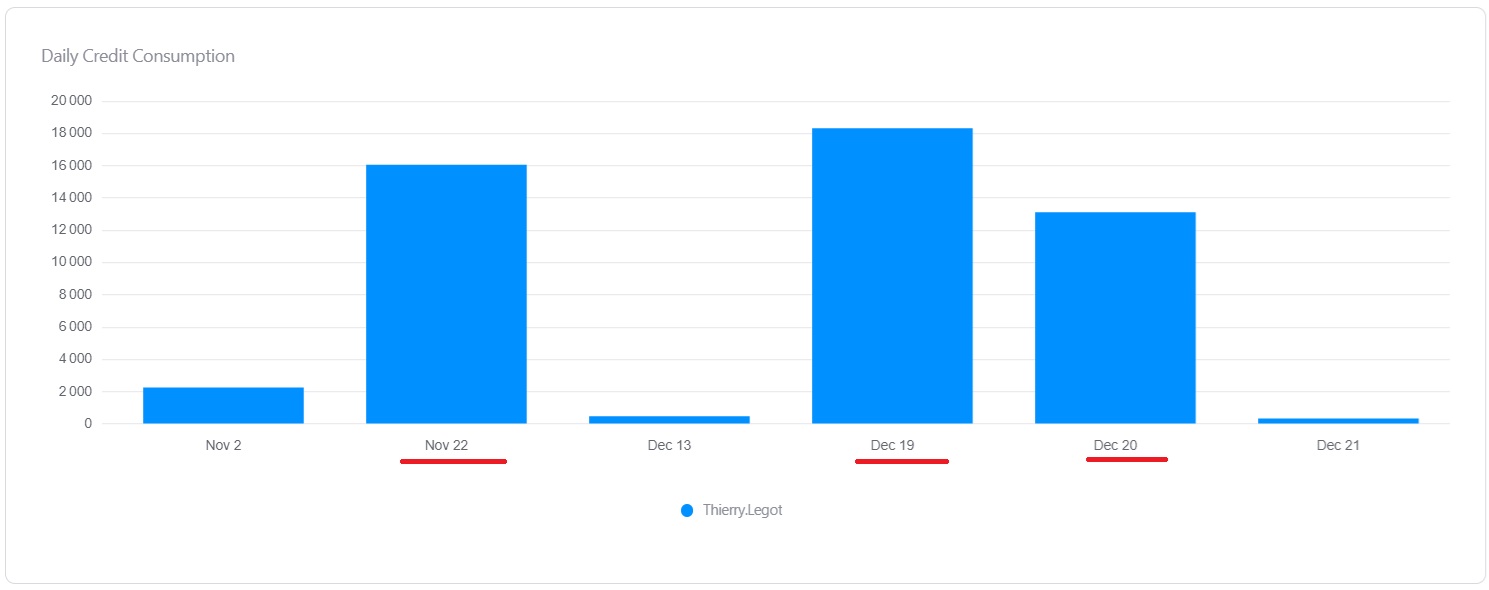

Spoiler alert: vibe coding costs money 😢

The RAG server idea

One simple way to share knowledge and context is through a RAG server.

A RAG (Retrieval-Augmented Generation) server is basically a search API, but one designed to feed relevant context to AI systems (knowledge about the MO5, in my case).

At first, I had a very naïve view of the implementation:

- store documents in a database

- perform a keyword search (like SQL LIKE)

- return the passages containing those words through an API

After doing some research, this naïve vision turned out to be full of drawbacks.

Why keyword search doesn’t work

- No semantic understanding: if the user asks “How do I authenticate a user?” but the document talks about session management and JWT tokens, no words match—even though the content is relevant.

- Same word, different meaning: the meaning of “public key” is not the same in cryptography, networking, or databases. Lexical search cannot disambiguate context.

- Fragile to rephrasing: plurals, synonyms, paraphrases, typos, etc.

In short, keyword search does not understand meaning.

It fails as soon as you rephrase or express a concept differently. It’s clearly not the right model.
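To make the failure concrete, here is a minimal sketch of the naïve approach in Python. It is not the code of my API: `keyword_search`, the two sample documents and the "ignore words of one or two letters" rule are all made up for illustration. Substring matching plays the role of SQL LIKE.

```python
def keyword_search(query: str, documents: list[str]) -> list[str]:
    """Return documents containing at least one query word (substring match, like SQL LIKE)."""
    words = [w.strip("?!.,").lower() for w in query.split()]
    # Hypothetical rule: drop very short words ("I", "a", "do") that would match almost anything.
    words = [w for w in words if len(w) > 2]
    return [doc for doc in documents if any(w in doc.lower() for w in words)]


# Made-up sample documents.
documents = [
    "Session management relies on JWT tokens signed by the server.",
    "Public keys are exchanged during the handshake.",
]

# The question is about authentication, but shares no word with the relevant document.
hits_question = keyword_search("How do I authenticate a user?", documents)

# The same document is only found when the query reuses its exact vocabulary.
hits_exact = keyword_search("JWT tokens", documents)

print(hits_question)
print(hits_exact)
```

The first query comes back empty even though the first document is exactly what the user needs, while the second one only works because it repeats the document's own words.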

Chunking, embeddings, and black magic

As soon as you start reading about RAG servers, the terms chunks, embeddings, and cosine similarity appear everywhere.

Before working on this project, I had no idea these concepts even existed (and yet we use them every day).

A RAG system:

- uses embeddings to represent the meaning of text as vectors

- compares these vectors using cosine similarity to retrieve semantically close passages, even without identical words

- relies on chunking to index coherent pieces rather than entire documents
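Chunking is the easiest of the three to picture. The sketch below shows one possible strategy, grouping consecutive paragraphs up to a size limit. The function name `chunk_text`, the `max_chars` parameter and the sample text are assumptions for illustration, and real systems often use other strategies (fixed token windows, overlapping chunks, splitting on headings).

```python
def chunk_text(text: str, max_chars: int = 200) -> list[str]:
    """Group consecutive paragraphs into chunks of at most max_chars characters.

    A single paragraph longer than max_chars is kept whole rather than cut mid-sentence.
    """
    chunks: list[str] = []
    current = ""
    for paragraph in text.split("\n\n"):
        paragraph = paragraph.strip()
        if not paragraph:
            continue
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= max_chars or not current:
            # Still fits (or the chunk is empty): keep growing the current chunk.
            current = candidate
        else:
            # Adding this paragraph would overflow: close the chunk and start a new one.
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks


# Made-up sample text with three short paragraphs.
sample = (
    "First paragraph about graphics.\n\n"
    "Second paragraph about sound.\n\n"
    "Third paragraph about the keyboard."
)
chunks = chunk_text(sample, max_chars=70)
for chunk in chunks:
    print(repr(chunk))
```

Each chunk is then embedded and indexed on its own, so a search returns a focused passage instead of a whole document.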

Result:

- better context

- less noise

- fewer hallucinations

- answers far more reliable than with simple text search

For me, the truly magical part is embeddings: giving a numerical representation to the meaning of a text.

It may be obvious to some, but not to me. The mere idea that someone managed to mathematically formalize the meaning of a sentence is simply mind-blowing to me 😄
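Once texts are vectors, "close in meaning" becomes "pointing in a similar direction", which is what cosine similarity measures: the dot product of two vectors divided by the product of their lengths. Here is a small sketch. The three-dimensional vectors are invented toy values, not real embeddings (those typically have hundreds of dimensions).

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        # Convention chosen for this sketch: an all-zero vector is similar to nothing.
        return 0.0
    return dot / (norm_a * norm_b)


# Toy vectors, purely illustrative.
query = [0.9, 0.1, 0.0]
doc_close = [0.8, 0.2, 0.1]
doc_far = [0.0, 0.1, 0.9]

score_close = cosine_similarity(query, doc_close)
score_far = cosine_similarity(query, doc_far)
print(score_close > score_far)
```

Retrieval then boils down to computing this score between the query vector and every chunk vector, and keeping the best ones.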

OpenAI or local?

While discussing my project with ChatGPT, it obviously recommended interfacing with OpenAI for embeddings.

Even though using an AI service is more performant (better semantics, faster, multilingual), I wanted to stay as cheap as possible.

My goal is to deploy this API on the Internet so it can be used by the community. If it ever became truly popular, AI costs could quickly limit my ambitions.

The target architecture must be able to abstract the embeddings implementation:

- a local, “home-made” provider

- or an AI API like OpenAI / Azure OpenAI (you never know, I might change my mind)
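The idea of swapping providers can be sketched with a small interface. This is a Python illustration of the principle only, not my actual code: the names `EmbeddingProvider`, `LocalHashingProvider`, `RemoteApiProvider` and `index_chunks` are hypothetical, the local provider is a toy hashed bag-of-words, and the remote one is deliberately left unimplemented.

```python
import zlib
from typing import Protocol


class EmbeddingProvider(Protocol):
    """Anything that can turn a text into a vector of floats."""

    def embed(self, text: str) -> list[float]: ...


class LocalHashingProvider:
    """Toy local provider: hashed bag-of-words (a stand-in, not a real embedding model)."""

    def __init__(self, dimensions: int = 16) -> None:
        self.dimensions = dimensions

    def embed(self, text: str) -> list[float]:
        vector = [0.0] * self.dimensions
        for word in text.lower().split():
            # crc32 is deterministic across runs, unlike Python's built-in hash().
            vector[zlib.crc32(word.encode("utf-8")) % self.dimensions] += 1.0
        return vector


class RemoteApiProvider:
    """Placeholder for a hosted embeddings API; the HTTP call is intentionally omitted."""

    def embed(self, text: str) -> list[float]:
        raise NotImplementedError("call the hosted embeddings API here")


def index_chunks(chunks: list[str], provider: EmbeddingProvider) -> list[tuple[str, list[float]]]:
    """The indexing code depends only on the interface, never on a concrete provider."""
    return [(chunk, provider.embed(chunk)) for chunk in chunks]


index = index_chunks(["hello world", "hello again"], LocalHashingProvider(dimensions=8))
print(len(index), len(index[0][1]))
```

Switching from the local provider to a hosted one would then be a one-line change at the place where the provider is created.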

Local embeddings

TF-IDF

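TF-IDF (Term Frequency – Inverse Document Frequency) is a classic technique for turning text into vectors. Strictly speaking these are sparse, word-based vectors rather than learned embeddings, but they can be compared with cosine similarity in the same way.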

In short:

- TF: how often does a word appear in the text?

- IDF: is this word rare or common across all documents?

A word that is rare overall but present in a document is considered important for its meaning.
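As a rough sketch of the two ingredients, here is a plain TF-IDF in Python, using the textbook weight tf × log(N / df), where N is the number of documents and df the number of documents containing the term. The helper names and sample sentences are invented, and actual implementations (mine included) often use smoothed or normalized variants of the formula.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def tf_idf_vectors(documents: list[str]) -> list[dict[str, float]]:
    """Return one sparse {term: weight} vector per document."""
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        vectors.append({
            # TF (share of the document) times IDF (rarity across documents).
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors


# Made-up sample documents.
docs = [
    "the sprite is drawn on the screen",
    "the sound chip plays a note",
    "the screen memory holds the sprite",
]
vectors = tf_idf_vectors(docs)
print(vectors[1]["the"], vectors[1]["sound"])
```

"the" appears in every document, so its IDF is log(3/3) = 0 and it carries no weight, while "sound" appears in a single document and ends up with a positive score there.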

Advantages:

- everything is computed locally

- no external API

- no AI cost

Neural model

Another option: a pre-trained neural model.

The idea:

- a deep learning model transforms text into a dense vector

- semantically similar texts have similar vectors, even with different words

This is usually more accurate in terms of relevance, but:

- heavier

- often based on Python scripts

- slower response times

Deployment on Raspberry Pi

At the end of November, I started coding with Augment (Indie Plan subscription at $20/month, 40,000 credits).

API setup, unit tests, TF-IDF implementation, neural model—everything was going smoothly.

After creating my NAS, I moved the sources to my new server and wanted to deploy the API there.

I asked Augment to create a Docker image for deployment on a Raspberry Pi (I deployed the TF-IDF implementation).

We spent the evening together:

- unsuitable images

- API bugs

- configuration issues

But around quarter to pumpkin (11:45 PM for those who don’t get the Cinderella reference), everything was working and deployed on the NAS.

Response time: ~50 ms. Very decent for a Raspberry Pi.

The neural model… and the cold shower

The next day, I thought that more relevant results would be better.

So I asked Augment to deploy the neural model.

The entire afternoon was spent on it:

- incompatible images

- Python version issues

- bugs in the C# implementation

- deployment time

When I finally looked up, it was dark outside. It was a little after 6 PM.

Good news:

- everything worked

- more relevant answers

Bad news:

- 40 seconds per response

Conclusion: hosting a neural model on a Raspberry Pi is not the idea of the century…

Rollback to the simple, fast, and efficient model.

The real cost of vibe coding

To build this API, I delegated almost everything to Augment.

I pushed vibe coding very far, even asking it to run compilation and deployment commands for me (peak laziness).

Technically, it works very well.

The project is functional.

But the downside:

- no pride: it’s not really my work

- I didn’t learn anything deeply

- vibe coding is expensive: in one evening and one afternoon, I almost burned through my entire monthly quota

I estimate having spent around 47,000 credits on this project (over the course of three half-days, while my monthly quota is 40,000 credits), and that’s not even mentioning my carbon footprint…

Conclusion

When moving to production, I will probably need to rely on an AI like OpenAI or Azure OpenAI to achieve better semantic analysis performance. You can’t do everything on your own, and sometimes you have to accept delegating to the professionals 😄.

A functional project, technically successful, but with a rather bitter personal aftertaste. Next time, I’ll be more involved and won’t let the AI do everything. After all, it’s my project, not the AI’s 😄